How to shoot yourself in the foot with localized LLMs

A Comparative Guide to Localized Large Language Models

Local LLMs. They torch toes, drain GPUs, and crank fans, but they also give PMs freedom, privacy, and the thrill of running off-road experiments.

I spent a few weeks jamming open-source models onto an underpowered laptop. I learned two things: fans get loud, and toes are surprisingly shootable. Let me count the ways …

Local LLMs: The Foot-Gun Field Guide

GPT-OSS: Free bullet. Still costs you your foot.

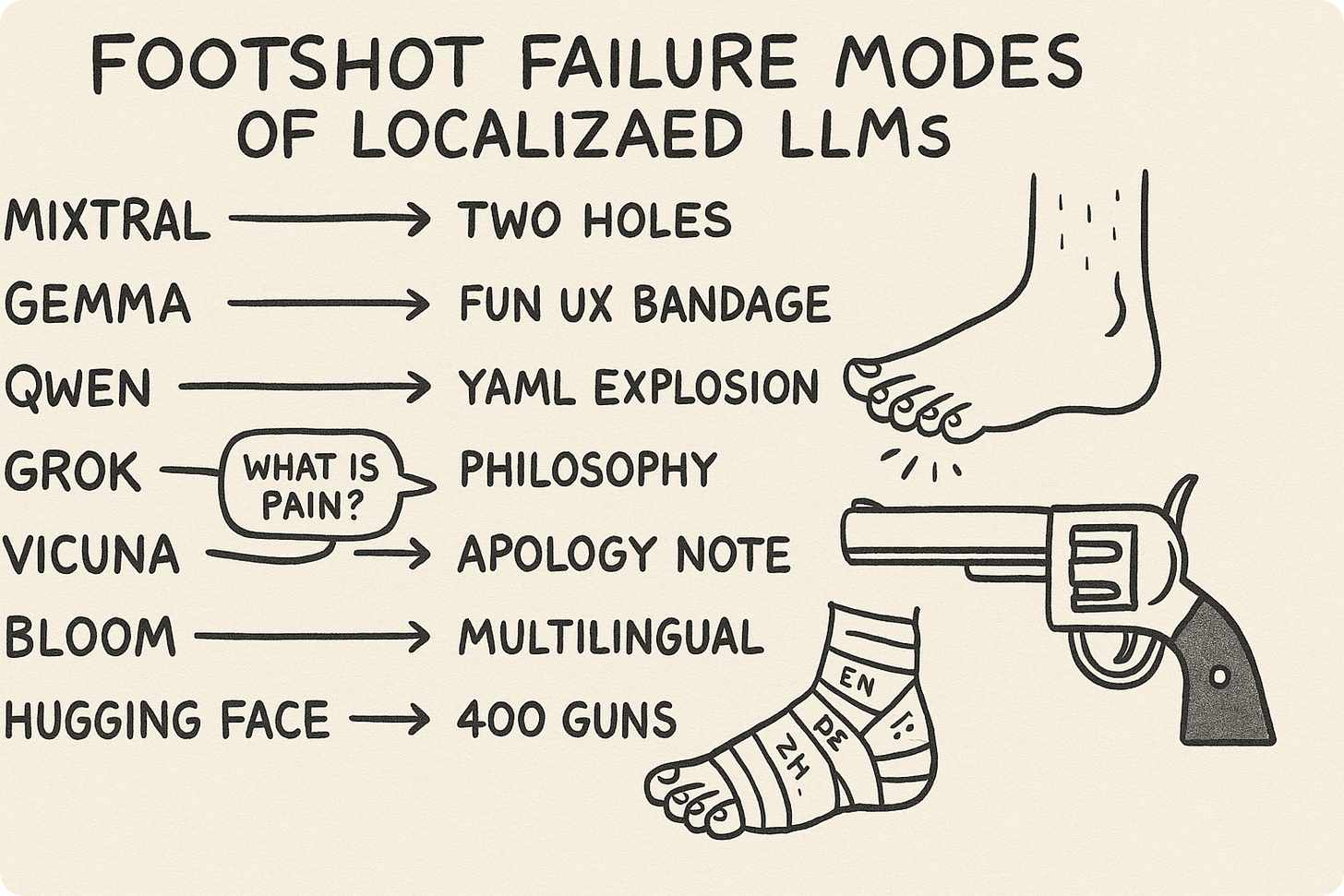

Mistral: You shoot your foot, but it’s so subtle, it’s artistically shot. Très chic.

LLaMA: License says “research feet only, no commercial toes.”

Grok: Shoots your foot, then philosophizes about the meaning of pain.

DeepSeek: Shoots your foot with uncanny efficiency, demands more compute by lunch.

Qwen: Footshot buried in 16 pages of YAML and a corporate SLA.

Gemma: You shoot your foot, but Google’s fun UI makes it a blast.

Vicuna: Footshot, plus a 500-word apology chat.

Phi: Tiny gun, cheap bullets, surprisingly accurate toe removal.

Falcon: State-of-the-art wound, delivered with desert precision.

Mixtral: Two guns at once ... six shots minimum.

LLaVA: Shoots your foot, then explains the wound in 12 annotated images.

BLOOM & Friends: Footshot in 46 languages, paper pending with 10,000 co-authors.

Hugging Face: 400 guns, weekend gone, but the community’s got your benchmark graphs.

Ollama: Shoot your foot locally, but forget which model did it.

Local fine-tune: DIY gun jams, but your foot’s sovereign and open-source.

Why I Still Love Local (PM edition)

As a product manager and recovering engineer, I still found the pain worth it for these gains:

Data safety: Sensitive corpora never leave my box.

Experiments without ridicule: I can try weird ideas off-road.

Fine-tune my doom: Personal evals, custom data, honest failure loops.

Latency control: Milliseconds beat rate limits.

Cost clarity: GPUs are expensive, surprise invoices are worse.

Offline resilience: Planes, trains, client sites with bunker Wi-Fi.

Kidding aside, product managers of all skill levels should be exploring localized LLMs now, because they open up two critical fronts: customer value and personal mastery.

For customers in highly regulated industries, local models offer a path to deploy AI capabilities without sending sensitive data to external clouds, easing compliance concerns and unlocking adoption that would otherwise stall.

For PMs themselves, localized LLMs provide a low-cost, private sandbox to experiment with prompting, vibe coding, and agentic workflows, free from API rate limits or runaway bills. Running models on your own machine creates a safer space to build intuition, test hypotheses, and push beyond canned demos, so you’re better equipped to guide teams and customers when the stakes are higher.

How Not To Shoot Your Foot

Start with the job: Pick one use case. Summarize or classify, not “do everything.”

Right-size the model: Small beats stalled. Quantized beats quit.

Mind the VRAM: Budget tokens, context, and batch like cash.

Read the license: Research vs commercial is not a vibe.

Log everything: Prompt, seed, quant, checksum, dataset slice.

Build a tiny eval: 10-30 gold examples, one score per task.

Sandbox your data: Separate secrets from scratch space.

Version your runs: Name your experiments like you mean it.

Put a kill switch on: CPU fallback, shorter context, smaller model.

Do tiny acts of discovery: One proof-of-life at a time.
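To put numbers on "Mind the VRAM," here is a back-of-envelope sketch, not a profiler. It assumes a standard transformer whose memory is dominated by the weights plus the key/value cache. The function name, its parameters, and the example model shape (32 layers, 8 KV heads, head size 128) are hypothetical, and real runtimes add overhead for activations and buffers on top.

```python
def estimate_vram_gib(
    n_params_billion: float,
    bits_per_weight: float,
    n_layers: int,
    n_kv_heads: int,
    head_dim: int,
    context_tokens: int,
    batch_size: int = 1,
    kv_bytes_per_value: int = 2,  # assumption: fp16 KV cache
) -> dict:
    """Rough lower bound on memory use; treat the result as a floor."""
    # Quantization shrinks this term: fewer bits per weight, fewer bytes.
    weight_bytes = n_params_billion * 1e9 * bits_per_weight / 8
    # K and V each store one vector per layer, per KV head, per token,
    # so the cache grows linearly with both context length and batch size.
    kv_bytes = (2 * n_layers * n_kv_heads * head_dim
                * context_tokens * batch_size * kv_bytes_per_value)
    gib = 1024 ** 3
    return {
        "weights_gib": weight_bytes / gib,
        "kv_cache_gib": kv_bytes / gib,
        "total_gib": (weight_bytes + kv_bytes) / gib,
    }


# Hypothetical 7B-class model, 4-bit weights, 8k context, batch of 1.
budget = estimate_vram_gib(7, 4, n_layers=32, n_kv_heads=8,
                           head_dim=128, context_tokens=8192)
print({name: round(value, 2) for name, value in budget.items()})
```

For that made-up shape, the weights come to roughly 3.3 GiB and the KV cache to 1 GiB. Doubling the context or the batch doubles the cache, which is why both belong in the budget.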

A 10-Minute PM Smoke Test

Task: classify inbound tickets into 6 buckets

Models: tiny vs small vs medium local

Inputs: 25 real tickets, redacted

Rules: 1 pass, no hand edits

Score: accuracy, time-to-first-token, full-run time

Decision: pick the smallest model that clears 90% accuracy

Next: add 10 harder tickets, re-run, re-decide
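The smoke test above fits in a few lines of harness. This is a minimal sketch under stated assumptions: `classify` stands in for whatever call reaches your local model, the helper names are hypothetical, and "clears 90%" is read as "at least 90%." Time-to-first-token is left out because measuring it depends on your runtime's streaming interface.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple

Ticket = Tuple[str, str]  # (redacted ticket text, gold label)


def run_smoke_test(classify: Callable[[str], str],
                   tickets: List[Ticket]) -> Dict[str, float]:
    """One pass, no hand edits: score a classifier against gold labels."""
    if not tickets:
        raise ValueError("need at least one ticket")
    start = time.perf_counter()
    correct = sum(1 for text, gold in tickets if classify(text) == gold)
    elapsed = time.perf_counter() - start
    return {"accuracy": correct / len(tickets), "full_run_seconds": elapsed}


def pick_smallest_passing(results: List[Tuple[str, float, float]],
                          threshold: float = 0.90) -> Optional[str]:
    """results holds (model name, size in billions of params, accuracy)."""
    passing = [r for r in results if r[2] >= threshold]
    # Smallest model that clears the bar wins; None means nothing passed.
    return min(passing, key=lambda r: r[1])[0] if passing else None


# Stub classifier and two toy tickets, just to show the call shape.
def keyword_stub(text: str) -> str:
    return "billing" if "refund" in text else "other"


toy_tickets = [("refund please", "billing"), ("app crashes on login", "bug")]
print(run_smoke_test(keyword_stub, toy_tickets)["accuracy"])

# Placeholder accuracies, not measurements.
print(pick_smallest_passing([("tiny", 1, 0.84), ("small", 3, 0.92),
                             ("medium", 8, 0.96)]))
```

Swap the stub for a real model call and the toy tickets for your 25 redacted ones. The decision rule stays the same when you add the 10 harder tickets and re-run.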

Reusable Prompt Snippet

You are an exacting classifier.

Labels: {list of 6}

Return JSON: {"label":"<one>", "rationale":"<1-2 sentences>"}

If uncertain, choose "other" and say why.
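A prompt like this only earns its keep if the reply is checked before anything downstream trusts it. The sketch below fills in the template and validates the JSON that comes back. The helper names, the trailing "Ticket:" line, and the example labels are assumptions for illustration, and the fallback mirrors the prompt's own rule: anything malformed becomes "other."

```python
import json
from typing import Dict, List

# Doubled braces are literal braces in str.format templates.
PROMPT_TEMPLATE = """You are an exacting classifier.
Labels: {labels}
Return JSON: {{"label":"<one>", "rationale":"<1-2 sentences>"}}
If uncertain, choose "other" and say why.

Ticket: {ticket}"""


def build_prompt(labels: List[str], ticket: str) -> str:
    """Fill the template with the label list and one ticket."""
    return PROMPT_TEMPLATE.format(labels=", ".join(labels), ticket=ticket)


def parse_reply(reply: str, labels: List[str]) -> Dict[str, str]:
    """Accept only valid JSON with a known label; otherwise fall back."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return {"label": "other", "rationale": "reply was not valid JSON"}
    if not isinstance(data, dict) or data.get("label") not in labels:
        return {"label": "other", "rationale": "reply used an unknown label"}
    return {"label": data["label"],
            "rationale": str(data.get("rationale", ""))}


# Hypothetical label set; "other" must be one of the six.
LABELS = ["billing", "bug", "access", "feature", "outage", "other"]
print(build_prompt(LABELS, "I was charged twice this month."))
print(parse_reply('{"label":"billing","rationale":"Double charge."}', LABELS))
print(parse_reply("Sure! The label is billing.", LABELS))
```

Small models often wrap the JSON in chatty prose. Counting those replies as "other" keeps the smoke test honest about how well each model follows instructions.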

Executive Translation

Local LLMs give our compliance-constrained customers a way to adopt AI without sending sensitive data to external clouds. At a personal level, they let us test safely, cheaply, and privately. We trade vendor speed for control and learning. We win by narrowing scope, instrumenting runs, and iterating in hours, not quarters.

Takeaway

Local isn’t a silver bullet. It’s a sober scalpel. Point it at one job, measure, and keep your toes.

What did I miss? Add your favorite local foot-gun or survival tip in the comments.