Organizing on AI

It’s Not About Tools. It’s About Behaviors.

Most AI-First strategies don’t fail because the tech is weak. They fail because teams don’t reorganize around people, problems, and behaviors. Problem framing still matters. Outcomes still matter. Scope still matters. But AI forces us to think, and act, in entirely new ways. This is especially true as AI dramatically reshapes the role of the product manager.

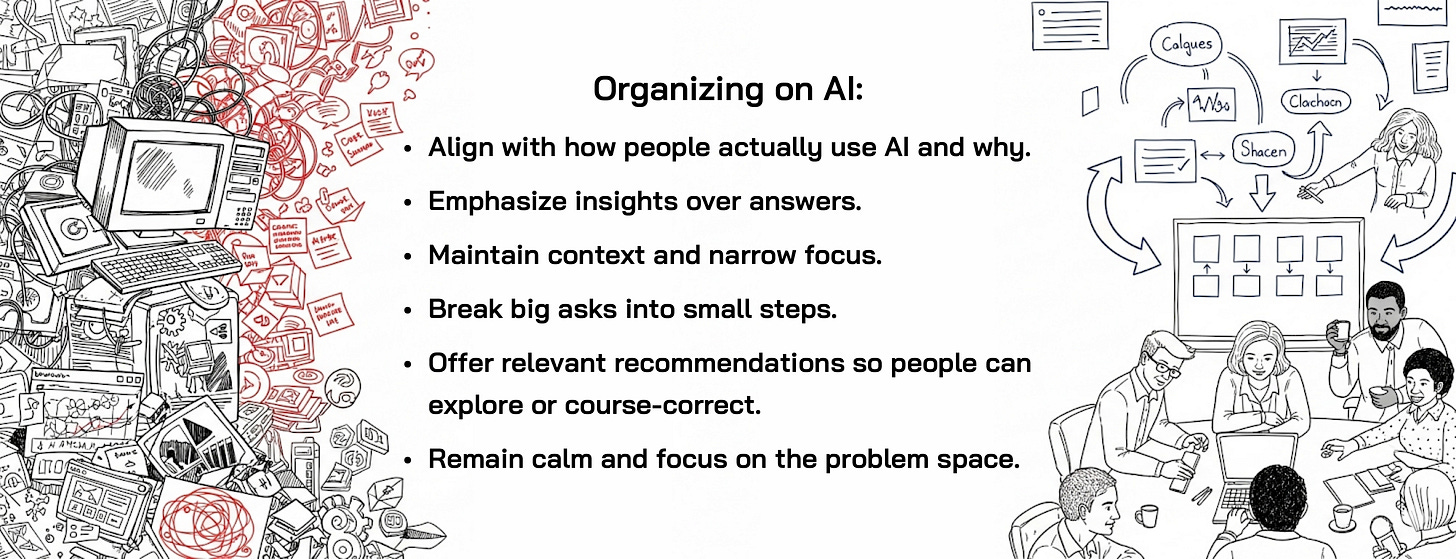

Organizing on AI requires us to:

Align with how people actually use AI and why.

Emphasize insights over answers.

Maintain context and narrow focus.

Break big asks into small steps.

Offer relevant recommendations so people can explore or course-correct.

Remain calm and focus on the problem space.

Back in 2011, Luke Wroblewski wrote ‘Organizing Mobile,’ and it changed how digital teams thought about design. His point was simple but profound: you couldn’t just shrink a desktop site to a smaller screen. Mobile had its own behaviors, and you had to design for them.

That shift, from porting technology to organizing around behaviors, defined the winners of the mobile era.

“Disruptive innovation doesn't always come with shiny gadgets. It's about rethinking how to solve problems and deliver value in ways that fundamentally shift the competitive landscape.” ~ Clayton M. Christensen (1952-2020), Management Professor & Author

From Mobile First to AI-First

Fast forward to 2025. Just as “mobile first” forced us to abandon lazy desktop ports, “AI first” forces us to abandon lazy bolt-ons.

You can’t just slap “add chatbot” on the roadmap and call it a strategy. That’s how you get Frankensoft: a stitched-together hodgepodge of features that look impressive in a demo but collapse in real-world use.

Why? Because great AI isn’t a retrofit play. It’s a reinvention bet.

And the reinvention starts not with models, vibe-coding tools, evals, agents, APIs, or tokens per prompt, but with behaviors. How people use AI. How PMs and engineers enlist AI. And how those two layers interact.

The Two Layers of AI Behaviors

Wroblewski grounded mobile design in user behaviors: lookup, explore, check-in, edit. That worked because mobile was about micro-moments in daily life.

AI is different. It operates at two levels.

Builder Behaviors — how PMs and engineers use AI to build AI

AI isn’t just a product surface. It’s the workbench and the welder. Product managers and engineers now enlist AI to collapse discovery cycles, stress-test assumptions, and prototype futures. These builder behaviors follow a pattern:

Search: AI researches the market, competitors, and user problems. Take the Atlassian AI Marketplace: Teams enlist GenAI to scan competitor release notes before roadmap planning sessions. AI can also cluster customer complaints or surface neglected market segments to reframe the problem space.

Synthesize: AI connects disparate data into coherent insights. Think of a banking product trio feeding in NPS comments and call center transcripts. The model clusters themes faster than any analyst could.

Simulate: AI role-plays stakeholders and user types to anticipate reactions. Picture a healthcare team using GPT to act as the compliance officer and surface likely objections to a proposed feature. AI can also simulate persona interviews to stress-test assumptions before running real ones.

Scaffold: AI builds structured artifacts like strategy docs, PRDs, or canvases. Instead of staring at a blank PRD, PMs enlist AI to generate a first draft that they refine with the team.

Show: AI generates prototypes and mockups that let teams see the future. Klarna’s AI shopping assistant was prototyped in Figma with AI-generated user flows before any code was written.

Sell: PMs package the case for sponsorship, funding, and GTM. An insurance PM enlists AI to build scenario-based business cases that help leadership see cost savings and new revenue potential. AI can even generate stakeholder-specific versions of the pitch: exec summary, engineer deep-dive, customer-facing explainer.

User Behaviors — how customers experience AI in their IRL problems

On the other side are the end users. Their needs don’t map to LLM jargon or MLOps diagrams. They’re hiring AI to get something done:

Decide: “I need clarity from overwhelming data.” CrowdStrike surfaces anomalies to security analysts so they can act fast, not dig through logs.

Generate: “I need new ideas or options.” Duolingo roleplays conversations to help learners practice without judgment.

Monitor: “Keep me updated when things change.” Supply chain PMs rely on AI alerts for shipment delays instead of manual tracking.

Automate: “Take this task off my plate.” Inspektlabs auto-checks car damage photos so adjusters don’t spend hours per claim.

The challenge, and the opportunity, for product managers is to connect the builder behaviors to these user behaviors. Otherwise, you end up shipping scaffolding tools instead of usable products.

The Ten Motions of Organizing on AI

So how do we turn principle into practice? Here’s a playbook PMs can run to keep themselves, their teams, and their AI initiatives organized.

1. Remain Calm and Focus on the Problem Space

What problem are we solving, for whom, and why now? Anchor in market and competitive reality. Take the case of an exec who demanded “AI in onboarding.” One PM reframed: “Are we solving for drop-offs during sign-up or for better sales conversions?” That question turned hype into clarity. AI can also cluster customer complaints to reveal hidden pain points that sharpen the problem space.
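To make the complaint-clustering idea concrete, here is a minimal sketch. It assumes a simple stand-in technique (greedy grouping by word overlap) rather than the embedding- or LLM-based clustering a real team would more likely use. The function names, stopword list, threshold, and sample complaints are all hypothetical, chosen only for illustration.

```python
import re

# Tiny illustrative stopword list (an assumption, not a standard one).
STOPWORDS = {"a", "an", "and", "the", "i", "my", "me", "is", "was",
             "on", "in", "to", "of", "it", "this"}


def tokens(text):
    """Return the set of lowercase words in text, minus stopwords."""
    return {w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS}


def jaccard(a, b):
    """Word-overlap similarity between two sets, from 0.0 to 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_complaints(complaints, threshold=0.3):
    """Greedily group complaints whose wording overlaps enough."""
    clusters = []  # each entry: {"vocab": set of words, "items": list of texts}
    for text in complaints:
        toks = tokens(text)
        # Find the existing cluster that shares the most vocabulary.
        best = max(clusters, key=lambda c: jaccard(toks, c["vocab"]), default=None)
        if best is not None and jaccard(toks, best["vocab"]) >= threshold:
            best["items"].append(text)
            best["vocab"] |= toks
        else:
            clusters.append({"vocab": set(toks), "items": [text]})
    return [c["items"] for c in clusters]


# Hypothetical complaints, invented for this example.
sample = [
    "Sign-up form keeps crashing on mobile",
    "The sign-up form crashed on my phone",
    "Billing charged me twice this month",
    "I was charged twice on billing",
]
groups = cluster_complaints(sample)  # two groups: sign-up crashes, double billing
```

Even a rough grouping like this gives the PM something to react to: if one cluster dominates, that is a candidate problem statement to validate before anyone says “add AI.”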

2. Feel Their Pains, Learn Their Gains

Apply JTBD (Jobs To Be Done) to clarify what job AI is being “hired” for. The team behind Duolingo Max didn’t say “let’s add AI.” They asked: “How do learners get unstuck?” Roleplay mode was the JTBD answer. AI can also simulate persona reactions to pressure-test assumptions before committing to research sprints.

3. Stake Out a Winning AI Playing Field

Pick your quadrant: automating systems, streamlining workflows, generating content, or suggesting actions. Klarna’s AI Assistant focused on “recommend and transact,” not “replace shopping.” It worked because they picked the right field to play in.

4. Stand on the Shoulders of Giants

Borrow confidence from proven plays in other industries. A hospital PM learned from ABB’s industrial AI: if ships can predict engine failures, why can’t we predict patient no-shows?

5. Demystify Your Data Strategy

No data = no AI. Bad data = expensive failure. A STAT report suggests Watson Health collapsed because it trained on too little oncology data. By contrast, Netflix thrives because it continuously feeds its recommender fresh viewing signals.

6. Unpack Assumptions and Unknowns

De-risk early. Stress-test internal assumptions and external forces. A fintech PM assumed “users will trust automated credit scoring.” Early tests showed they wouldn’t until transparency and appeals were added. AI can run adversarial tests to probe for bias and edge cases that humans might miss.

7. Build on Tiny Bets for Big Wins

Prototype small. Validate fast. Lemonade tested AI claims processing behind the scenes before exposing it to users. An AI simulation based on synthetic data de-risked adoption. AI can also automate rapid test design or animate cheap simulations so teams explore consequences before committing.

8. Mind the Monetization

AI is costly, so plan how it pays before scaling. Bloomberg spent heavily to train BloombergGPT, a custom finance LLM built from scratch rather than fine-tuned, yet later comparisons reportedly showed it did not beat GPT-4. By contrast, GitHub Copilot was monetized through developer subscriptions that tied directly to productivity gains.

9. Socialize the Story

No AI product sells itself. You need narrative and framing. Microsoft’s Aether committee on responsible AI wasn’t just governance. It gave the company a story it could sell to customers about responsible AI. Teams have also enlisted tools like OpenAI Sora or Google Veo to create explainer videos that help de-risk adoption. AI can generate tailored versions of a pitch for different audiences to amplify influence.

10. Deliver an Outcomes Blueprint

Summarize into a crisp, testable recommendation. A PM pitched a one-page AI canvas: persona, pain, play, data strategy, tiny bet, and expected lift. Execs didn’t just nod, they signed off.
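The one-page canvas can be sketched as a small data structure. This is only one way to represent it, assuming the six fields named above. The class name, method names, and sample values are hypothetical and invented for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class AICanvas:
    """One-page AI canvas with the six fields from the pitch above."""
    persona: str
    pain: str
    play: str
    data_strategy: str
    tiny_bet: str
    expected_lift: str

    def missing_fields(self):
        """Names of fields left blank, so gaps surface before the pitch."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def one_pager(self):
        """Render the canvas as plain 'Label: value' lines."""
        return "\n".join(
            f"{f.name.replace('_', ' ').title()}: {getattr(self, f.name)}"
            for f in fields(self)
        )


# Hypothetical values, for illustration only.
canvas = AICanvas(
    persona="New user signing up on mobile",
    pain="Drops off during sign-up",
    play="Suggest actions",
    data_strategy="Existing sign-up funnel events",
    tiny_bet="",  # deliberately left blank
    expected_lift="Fewer sign-up drop-offs, measured in an A/B test",
)
gaps = canvas.missing_fields()  # the blank tiny_bet shows up here
```

Forcing every field to be filled in is the point: a canvas with a blank tiny bet or an unmeasurable lift is not yet a testable recommendation.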

"The best innovations are often the simplest ones. Sometimes, all it takes is to reimagine a familiar problem and come up with a new way to solve it. That's the essence of disruptive innovation." ~ Liu Qing (a.k.a. Jean Liu), president of Didi Chuxing

Why Organizing Matters

In 2011, “organizing mobile” meant respecting the constraints of small, intimate screens. In 2025, “organizing on AI” means respecting the complexity of large, non-deterministic systems.

Both required humility. Both rewarded clarity.

Skip the behaviors, ignore the problems, bolt AI on like a shiny accessory... you don’t get transformation. You get Frankensoft.

And no PM wants to be called into that post-mortem.

Next Steps

I teach AI Product Management at Productside.com, where you can learn more about what I’ve covered here.

Reach out and connect with me. I can get you connected and, in some cases, possibly get you a discount.

For the rest of you, leave comments. You know you want to.

So agree with aligning on how and why people use AI. Too many PMs are giddy with cool tools right now.

From the guy who's constantly saying, "Hope is Not a Strategy" we get

"You can’t just put “add chatbot” on the roadmap and call it a strategy."

Yeah, I'll just leave that there.